CI/CD pipelines are notoriously opaque about what exactly takes place inside them. Even if you’re the one who wrote the YAML config file (the pipeline’s list of instructions), how can you be sure that everything takes place exactly as described? Worse, most pipelines are completely ephemeral, so even if something bad happens, no traces are left afterward.

Put simply, a pipeline is an automated way to test, build, and publish your software, app, or artifact. Such pipelines are getting more common and more complicated all the time. Pipelines help teams work faster and deliver more consistent and predictable results when producing their software artifact. The importance of automating these processes becomes even clearer when you consider that bigger companies could have hundreds of orchestrated pipelines that depend on each other, so it’s vital that everything runs smoothly and without fail.

The pipeline is the final link in the development process between the developers and the end user or client, yet I feel that there is not enough focus on how such automated processes could be used as attack vectors. Access to a build pipeline could enable malicious actors not only to penetrate the producing company’s systems but also to modify the resulting artifact in a way that affects all future users. The result is the huge blast radius of what is often described as a software supply chain attack.

In a previous article, we discussed the principles that should guide you in securing your CI/CD pipeline. In this article, I’ll cover some of the more common potential weak points in a CI/CD pipeline and offer some remediation options. For our purposes it doesn’t matter which automation tools or systems you’re using. The security principles are still valid; you just need to find the right tool for the job of securing that section of your pipeline.

Mastering the Art of CI/CD: The Key Elements You Can’t Ignore

Different pipelines have different elements and are using different tools. The elements I chose to focus on are relevant to almost any pipeline so securing these elements can be considered a best practice no matter your SCM, tooling, or existing security setup.

Secret Management – Secrets are usually strings or tokens used to connect your software or pipeline to other resources. A common example is the API keys used to connect your code to AWS resources like an S3 bucket. Most people already know that they should keep those secrets hidden and not include them as plain text in an open repository. Things are a bit more complicated inside a CI/CD pipeline. Usually, the pipeline needs these secrets to access the resources and information they protect. That means that anyone who can see what is happening inside your pipeline could potentially see and copy your secrets. One way to keep your secrets safe even inside your pipeline is to use a secrets management tool like HashiCorp Vault. Such tools not only obfuscate your secrets inside your pipeline but also make it much easier to rotate them, so that a secret stolen from a pipeline quickly becomes worthless. Regardless of the secrets management tool you choose, rotating your secrets regularly is a good security practice.
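To make the idea concrete, here is a minimal Python sketch of two habits that apply whichever secrets manager you use: read the secret from the environment at runtime instead of hardcoding it, and scrub it from anything you log. It assumes your CI system or secrets manager injects the value as an environment variable. The names `load_secret`, `redact`, and `DEMO_API_KEY` are hypothetical and are not part of any particular tool.

```python
import os
from typing import Iterable


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def load_secret(name: str) -> str:
    """Return the secret stored in the environment variable `name`.

    Fails loudly instead of falling back to a hardcoded default.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"secret {name!r} is not set")
    return value


def redact(text: str, secrets: Iterable[str]) -> str:
    """Replace every occurrence of a known secret in `text` before logging it."""
    for secret in secrets:
        if secret:  # skip empty strings, which would match everywhere
            text = text.replace(secret, "***")
    return text


if __name__ == "__main__":
    # DEMO_API_KEY is a made-up variable name used only for this illustration.
    os.environ.setdefault("DEMO_API_KEY", "example-value")
    api_key = load_secret("DEMO_API_KEY")
    print(redact(f"calling service with key {api_key}", [api_key]))
```

Redaction like this is only a safety net. Short-lived, regularly rotated secrets remain the stronger protection.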

Poisoned Pipeline Execution (PPE) – PPE is a technique that enables threat actors to ‘poison’ the CI pipeline: in effect, to change the pipeline steps or their order as defined in the pipeline instruction file. The technique abuses permissions in source code management (SCM) repositories to manipulate the build process. It allows an attacker to inject malicious code or commands into the build pipeline configuration, so that the pipeline runs malicious code during the build. Unless you verify the pipeline instruction file before each build, you’ll most likely be unaware that your builds are no longer running as you specified. Even a minuscule change, such as calling one library instead of another, can have far-reaching effects such as including backdoors or crypto miners in the final product.

One of the ways to avoid PPE is to verify that the pipeline instruction file is unmodified. You can cryptographically sign the file and add the signature verification as the first step of every pipeline. A tool you can use to sign and verify files is Valint, a tool published by Scribe Security. Whatever sign-verify tool you end up using, the idea is to make sure the integrity of your instruction file remains intact.

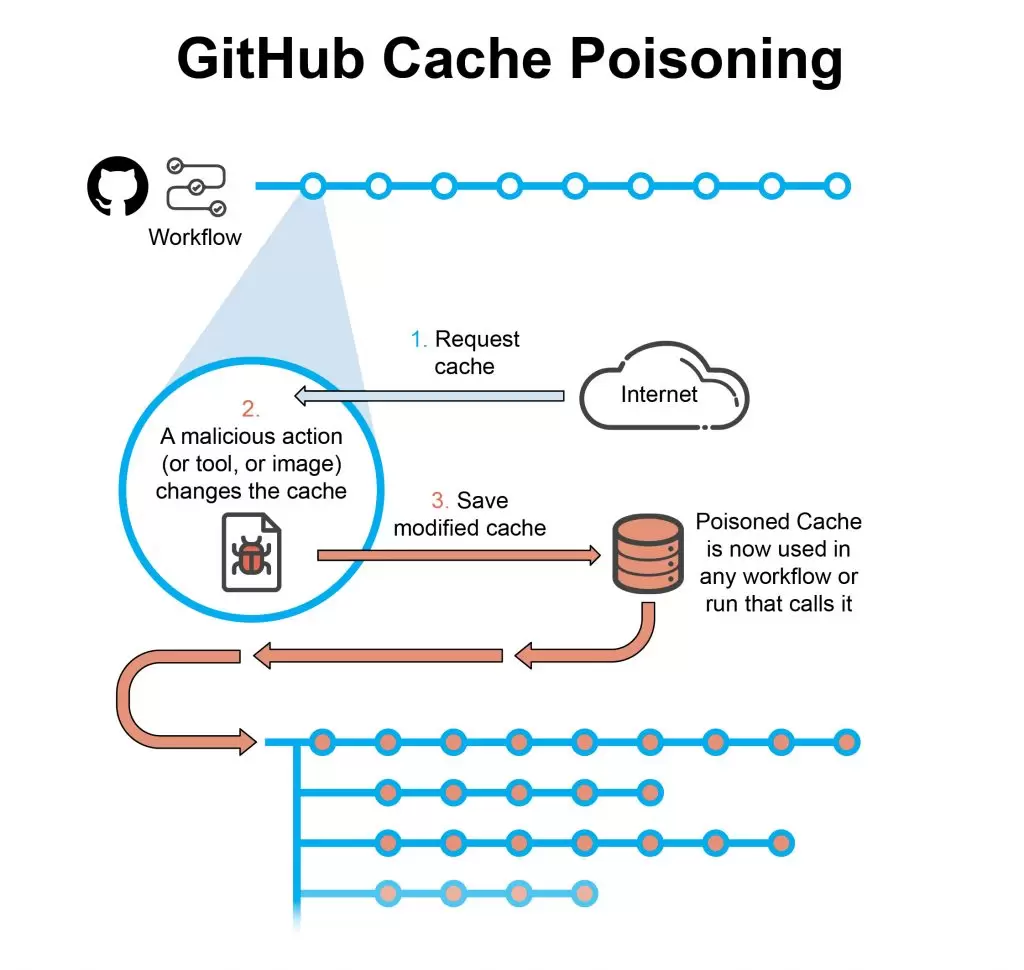
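The sign-then-verify flow can be sketched in a few lines of Python. Note that this is a stand-in and not how Valint works: to stay within the standard library it uses an HMAC-SHA256 tag with a shared key rather than a public-key signature. The function names, and the idea that the key and expected tag are stored somewhere the pipeline cannot modify, are assumptions made for illustration.

```python
import hashlib
import hmac
from pathlib import Path


def sign_file(path: Path, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the file's exact bytes."""
    return hmac.new(key, path.read_bytes(), hashlib.sha256).hexdigest()


def verify_file(path: Path, key: bytes, expected_tag: str) -> bool:
    """Recompute the tag and compare it to the expected one in constant time."""
    return hmac.compare_digest(sign_file(path, key), expected_tag)


if __name__ == "__main__":
    import sys

    # Hypothetical usage: python verify_pipeline.py <pipeline-file> <expected-tag>
    # The key below is a placeholder; in practice it would come from a secret store.
    pipeline_file, expected = Path(sys.argv[1]), sys.argv[2]
    if not verify_file(pipeline_file, b"placeholder-key", expected):
        sys.exit("pipeline instruction file was modified; aborting build")
```

Running a check like this as the very first pipeline step means that any change to the instruction file, even a single character, stops the build before the altered steps can run.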

Cache/Dependencies Poisoning – CI/CD pipeline workflows are frequently used to specify particular actions that must be performed. Each workflow is made up of one or more jobs, and each job is a sequence of actions. The majority of workflows do not share resources, for security reasons. There are, however, workarounds for when sharing resources becomes necessary. A cache that all workflows can access equally is one such workaround. Since the cache is shared by multiple workflows, a single breach in any workflow with the authority to alter it is enough to poison the cache for all subsequent workflow runs. Because the cache is only updated when there is a new artifact or package to download, a single poisoned cache may stay active for a very long time, affecting countless software builds run in that pipeline.

Just as with the pipeline instruction file, you can use Valint to sign and later verify your cache or a folder containing all the pre-approved dependencies your pipeline requires. Allowing your pipeline to independently connect to the internet and download whatever libraries it deems necessary is a surefire way to get more vulnerabilities and possible exploits into your final build, so if you’re the paranoid type, restricting it to verified, pre-approved dependencies is worth the effort.
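As a rough illustration of verifying a dependency folder, the Python sketch below records a SHA-256 digest for every file and later reports anything added, removed, or changed. It is not Valint’s mechanism, and the function names are hypothetical. It also assumes the approved manifest itself is signed or stored outside the cache, since a manifest an attacker can rewrite proves nothing.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def build_manifest(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to `root`) to its SHA-256 hex digest."""
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            relative = path.relative_to(root).as_posix()
            manifest[relative] = hashlib.sha256(path.read_bytes()).hexdigest()
    return manifest


def diff_manifest(root: Path, approved: Dict[str, str]) -> List[str]:
    """List every difference between the folder on disk and the approved manifest."""
    current = build_manifest(root)
    problems = []
    for name in sorted(set(current) | set(approved)):
        if name not in approved:
            problems.append(f"unexpected file: {name}")
        elif name not in current:
            problems.append(f"missing file: {name}")
        elif current[name] != approved[name]:
            problems.append(f"modified file: {name}")
    return problems
```

An empty list from `diff_manifest` means the folder matches what was approved. Anything else is a reason to fail the build before the dependencies are used.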

SSH Keys – An SSH key is an access credential for the SSH (secure shell) network protocol. This network protocol is used for remote communication between machines on an unsecured open network. SSH is used for remote file transfer, network management, and remote operating system access. With SSH keys, for example, you can connect to GitHub without supplying your username and personal access token at each visit. You can also use an SSH key to sign commits. You can likewise use SSH keys to connect to other services such as Bitbucket and GitLab.

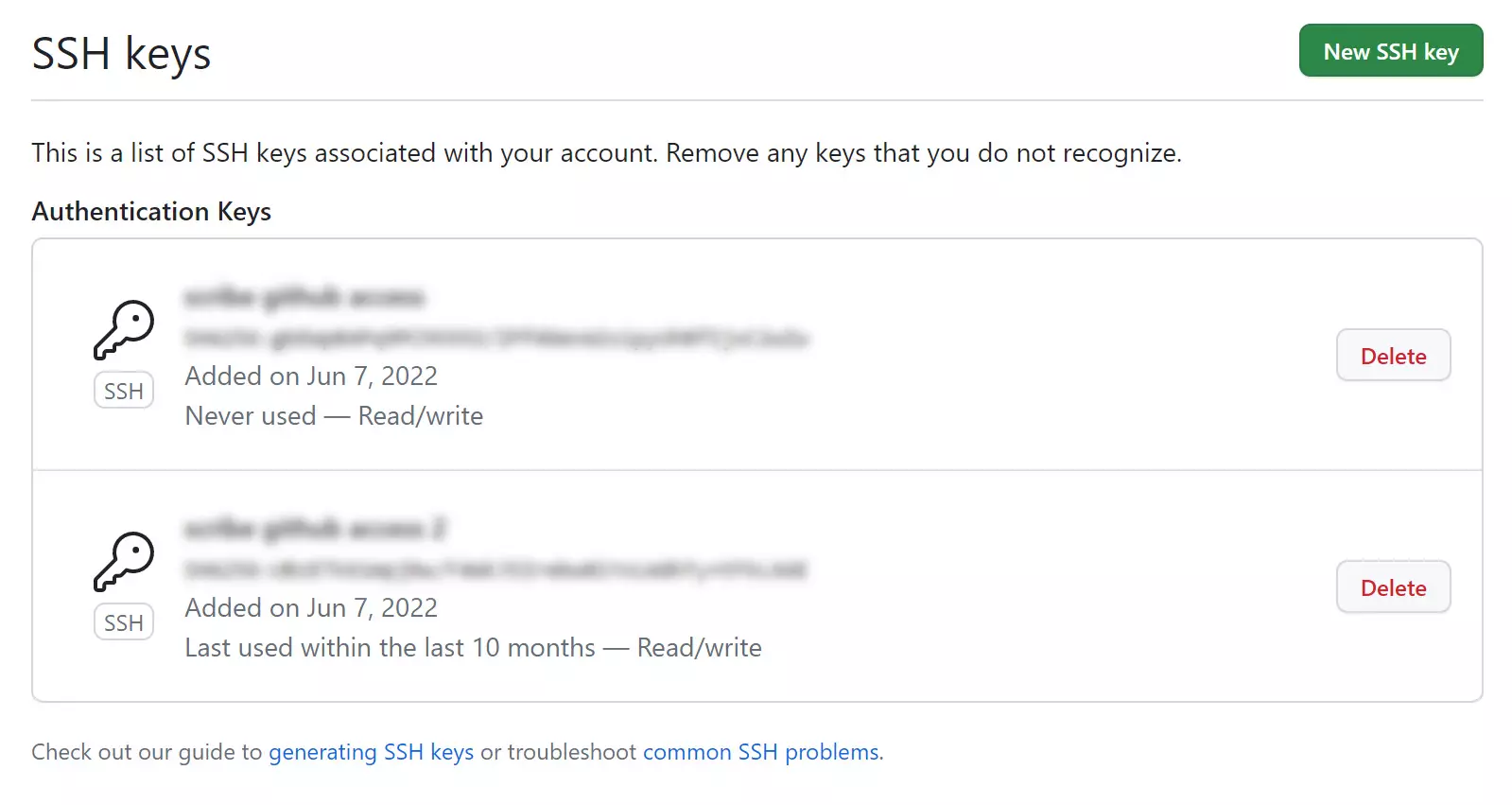

To maintain account security, you should regularly review your SSH keys list and revoke/delete any keys that are invalid or have been compromised. For GitHub, you can find your list of SSH keys on the following page:

Access settings > SSH and GPG keys

One tool that can help you keep on top of your SSH keys is GitGat, an open-source tool that produces a security posture report. The GitGat report will alert you if any of your configured SSH keys have expired or are invalid. On top of keeping a close eye on your keys and rotating them often, GitHub warns that if you see an SSH key you’re not familiar with, you should delete it immediately and contact GitHub Support for further help. An unidentified public key may indicate a possible security breach.

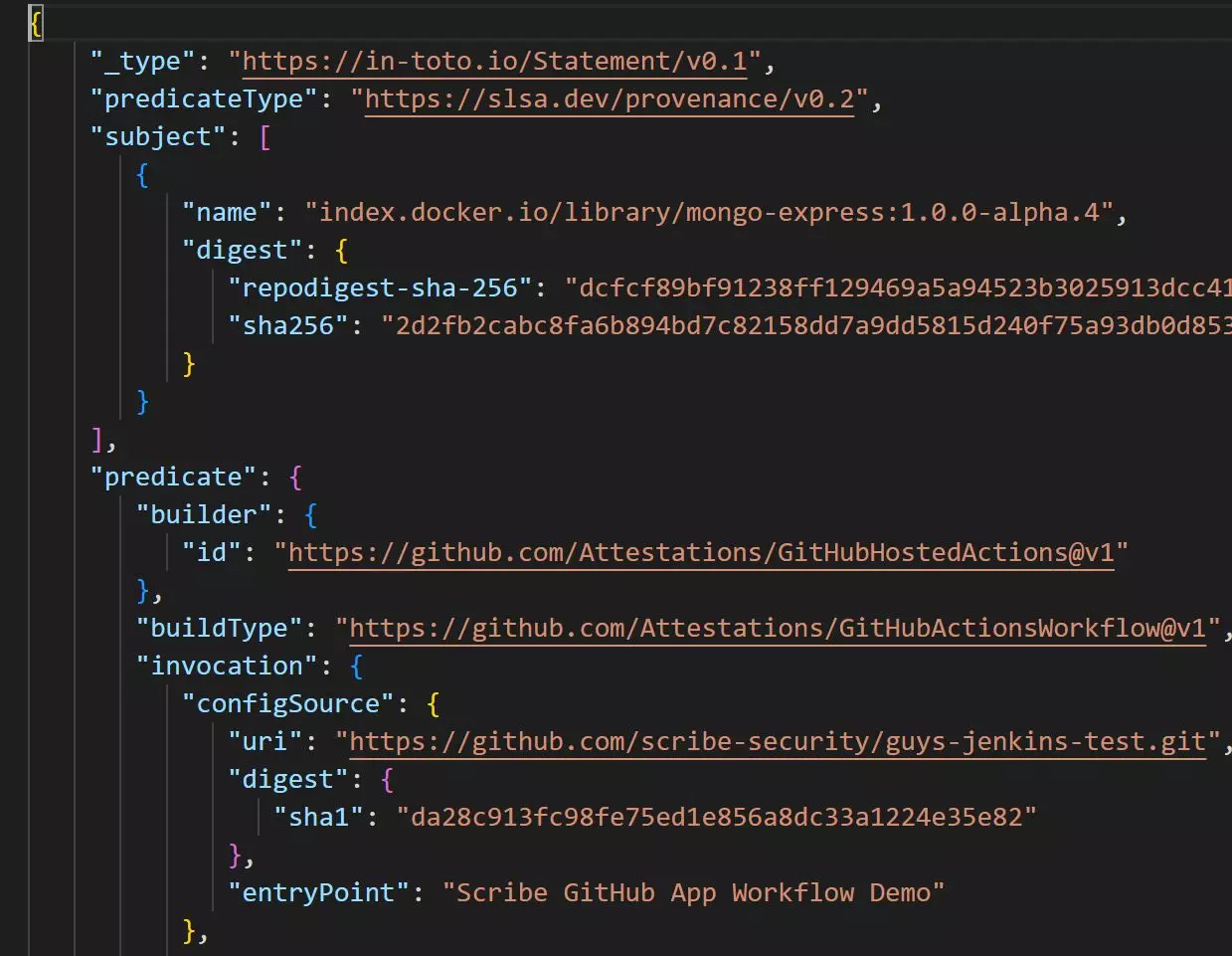
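If you’d like to script part of that review yourself, the sketch below flags keys older than a chosen age. It is not GitGat and makes several assumptions: that you have already fetched your key list as a list of dictionaries, that each entry carries `title` and `created_at` fields (as I understand GitHub’s REST API to return them for a user’s SSH keys), and that 90 days is a sensible rotation window. Adjust all three to your own setup.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional


def stale_keys(
    keys: List[Dict[str, str]],
    max_age_days: int = 90,
    now: Optional[datetime] = None,
) -> List[str]:
    """Return the titles of keys created more than `max_age_days` ago."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    stale = []
    for key in keys:
        # Accept both "...Z" and explicit-offset ISO 8601 timestamps.
        created = datetime.fromisoformat(key["created_at"].replace("Z", "+00:00"))
        if created < cutoff:
            stale.append(key["title"])
    return stale


if __name__ == "__main__":
    # Made-up sample records, for illustration only.
    sample = [
        {"title": "old-laptop", "created_at": "2020-01-15T10:00:00Z"},
        {"title": "ci-runner", "created_at": "2099-01-01T00:00:00Z"},
    ]
    print(stale_keys(sample))
```

A key’s age is only one signal. A key you don’t recognize at all should be removed regardless of when it was added.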

SLSA Provenance as an Immutable Pipeline Log – SLSA stands for Supply Chain Levels for Software Artifacts, which is a framework developed by Google and other industry partners to help improve the security and integrity of software supply chains.

SLSA defines a set of four levels, each of which adds security requirements and represents a higher level of trust and assurance in the software supply chain. One important requirement is file provenance.

For the SLSA framework, provenance is the verifiable information about software artifacts describing where, when, and how something was produced. Since most of the purpose of a CI/CD pipeline is to produce something (usually a build), being able to track exactly which files went in and what happened to them gives you a sort of unfalsifiable machine log of the pipeline. For this purpose, it’s important that the SLSA provenance is created independently of any user. The integrity of anything that a user can interrupt or modify is suspect.

One tool that allows you to create a SLSA provenance in your pipeline for a large variety of SCM systems is Valint (yup, the same tool from Scribe – it’s a very versatile tool). The link will show you how to connect Valint to your GitHub pipeline to generate a SLSA provenance for each build run on that pipeline. You can later access each provenance file and check it to see if anything untoward or unexpected happened. Here’s a snippet from such a provenance file:

The provenance file is just a JSON file, but since there are no automated tools to read provenance files (yet), the job of reading and interpreting them falls to you.
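Since the file is plain JSON, a few lines of Python can take some of the tedium out of that job. The sketch below pulls out the fields I would look at first. It assumes the provenance is an in-toto statement carrying a SLSA v0.2 predicate (with `subject`, `predicate.builder.id`, and `predicate.materials`); other SLSA versions and tools, including whatever your own pipeline emits, may wrap or name these fields differently. The sample document and the function names are made up for illustration and are not output from any real tool.

```python
import json
from typing import Any, Dict, Iterable, List

# A made-up statement used only to exercise the functions below.
SAMPLE: Dict[str, Any] = {
    "_type": "https://in-toto.io/Statement/v0.1",
    "predicateType": "https://slsa.dev/provenance/v0.2",
    "subject": [{"name": "example-app.tar.gz", "digest": {"sha256": "0" * 64}}],
    "predicate": {
        "builder": {"id": "https://example.com/hypothetical-builder"},
        "materials": [
            {"uri": "git+https://example.com/org/repo", "digest": {"sha1": "0" * 40}}
        ],
    },
}


def summarize_provenance(statement: Dict[str, Any]) -> Dict[str, Any]:
    """Extract who built what, and from which inputs."""
    predicate = statement.get("predicate", {})
    return {
        "predicate_type": statement.get("predicateType"),
        "builder": predicate.get("builder", {}).get("id"),
        "subjects": [s.get("name") for s in statement.get("subject", [])],
        "materials": [m.get("uri") for m in predicate.get("materials", [])],
    }


def unexpected_materials(
    statement: Dict[str, Any], allowed_prefixes: Iterable[str]
) -> List[Any]:
    """Return materials whose URI is missing or starts with none of the allowed prefixes."""
    prefixes = tuple(allowed_prefixes)
    materials = summarize_provenance(statement)["materials"]
    return [uri for uri in materials if not uri or not uri.startswith(prefixes)]


if __name__ == "__main__":
    print(json.dumps(summarize_provenance(SAMPLE), indent=2))
```

Comparing the builder and the list of materials against what you expect for a given build is a quick first check for anything untoward.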

Securing Your End Result – One of the most well-known software supply chain breaches in recent memory is the SolarWinds incident. In it, the attackers modified code on the build server so that each build the company released contained a secret backdoor. Another famous case of corrupting the end result is the 2020 hack of the Vietnam Government Certification Authority (VGCA), dubbed Operation SignSight. The intruders infiltrated the VGCA website and redirected download links to their own malware-laced version of the software. In both cases, the end users had no way to verify that the software they got was the software that the producing company intended to release.

A simpler attack could be to substitute the final image built at the end of the pipeline with a malicious one and upload the bad image to wherever it needs to go. In most such attacks the image appears to come from the producing company, so even a valid company certificate is not enough protection. It would just make the fake that much more believable.

Once again, the solution is to cryptographically sign the final artifact the pipeline produces, whatever it is, and allow the end user to verify that signature.

Since I’ve already mentioned Valint, I’ll also suggest Sigstore’s open-source Cosign. Cosign aims to make signing easy by removing the need for key infrastructure. It allows users to use their verified online identity (such as Google, GitHub, Microsoft, or AWS) as a key. You can use Cosign to both sign and verify images, making it ideal for signing and later verifying a pipeline’s final built image.

Whether you choose to use Valint or Cosign, allowing your users to verify a cryptographic signature on your final artifact to make sure they are getting exactly what you intended to deliver is an idea I’m sure most end users would appreciate.

Pipeline security in the future

There are, of course, other elements involved in a build pipeline that could benefit from added security. In this article, I chose to look at some of the more obvious and some of the more vulnerable pipeline elements.

Whatever pipeline tooling or infrastructure you’re using, make sure you keep your eyes open to the possibility of a breach. Never blindly trust any system that tells you it’s completely secure.

Considering the rising threat of identity theft, spear phishing, and other ways of faking legitimate access, I feel that the sign-verify mechanism is a good, versatile tool to have in your digital toolbox.

Whether you need to sign an image, file, or folder, I invite you to take a closer look at Scribe Security’s Valint as a one-stop shop tool for such needs.

This content is brought to you by Scribe Security, a leading end-to-end software supply chain security solution provider – delivering state-of-the-art security to code artifacts and code development and delivery processes throughout the software supply chains.