Imagine the next board meeting. You, a security leader in your organization, will present your standard deck with risks, mitigations, and incidents. Then, one of the board members will ask: How are you preparing to protect the new AI technologies and the MLOps pipelines that the company is already using?

Here is your answer.

AI Brings With It New Risks

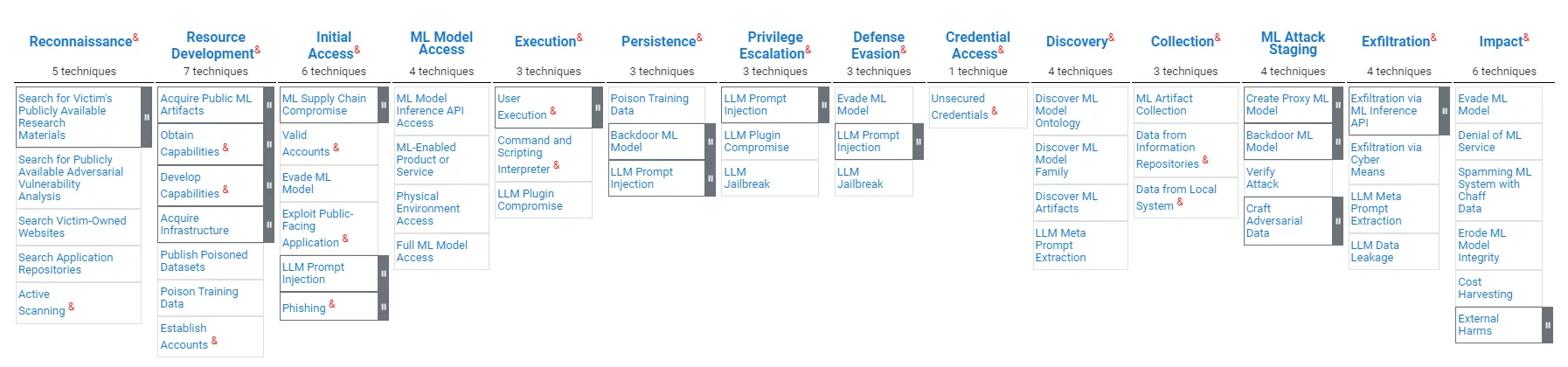

MLOps pipelines (sometimes also known as AI Ops), while akin to traditional data processing systems in their value to organizations, have distinct vulnerabilities. Attackers may try to insert biases, manipulate model outcomes, or compromise the integrity of data or tools, with the goal of undermining model reliability and skewing decision-making. ATLAS, a framework by the MITRE organization for MLOps protection, underscores the need for tailored security measures that address these challenges.

AI Will Bring With It New Regulations

The burgeoning field of AI and MLOps is under increasing scrutiny from regulators worldwide. In the absence of comprehensive federal legislation in the United States, guidance from bodies like the National Institute of Standards and Technology (NIST), such as the Artificial Intelligence Risk Management Framework 1.0, offers a glimpse into future regulatory frameworks. The framework emphasizes trustworthiness in AI systems, including the seven defined characteristics of trustworthy AI systems: safety, security and resilience, explainability and interpretability, privacy-enhanced, fair with harmful bias managed, accountable and transparent, as well as valid and reliable.

MLOps and Software Supply Chain Have a Lot in Common

The similarities between MLOps pipeline vulnerabilities and traditional software supply chain risks are striking. In both realms, attackers try to undermine the integrity of the development process and the security of the final product. Maliciously modifying an LLM is quite similar to maliciously modifying a software dependency. Maliciously modifying the software that runs the LLM is, in fact, a software supply chain attack. And the accountability, transparency, and trust requirements discussed in the AI world are exactly what stands behind the SBOM requirements in the software supply chain world.

The MITRE organization publishes cybersecurity models. MITRE has recently published the ATLAS model for MLOps protection, which can be found here. An overview of the model is given below:

Just like in the ‘traditional’ cybersecurity domain, AI and MLOps regulations are still being developed. Following these emerging regulations would make it easier to protect existing MLOps assets and to attest that MLOps processes comply with existing and emerging best practices. Organizations will need to attest both to their models’ integrity and to their models being unbiased.

There are Technologies That Will Serve Both Realms

Technologies that ensure data, code, and tools’ integrity can provide the required integrity controls for software supply chain security of both MLOps and DevOps.

Technologies that provide transparency and trust measures of software can provide similar values for MLOps.

Attestation-Based Supply Chain Security Technology

The concept of evidence-based software supply chain protection is simple: a software artifact should not be trusted unless there is enough evidence of its trustworthiness. Implementing this concept involves evidence collection tools, a policy engine that evaluates and verifies the evidence, alerts on violations with recommendations for mitigation, and sharing mechanisms that allow transparency and collaboration. The in-toto framework is an academic example of such a solution. Scribe’s software supply chain platform is, among other things, a commercial manifestation of this technology, and Scribe has extended it to support MLOps challenges.
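To make the concept concrete, here is a minimal Python sketch of evidence collection feeding a policy engine. It is an illustration only, not Scribe's or in-toto's implementation: the `Evidence` shape, the `require_signed` policy, and the evidence kinds are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass(frozen=True)
class Evidence:
    """One collected piece of evidence about an artifact (hypothetical shape)."""
    artifact: str
    kind: str      # e.g. "sbom", "bias-test-report"
    signed: bool
    data: dict = field(default_factory=dict)


# A policy looks at all evidence for one artifact and returns a violation
# message, or None when the evidence satisfies it.
Policy = Callable[[list[Evidence]], Optional[str]]


def require_signed(kind: str) -> Policy:
    """Build a policy demanding at least one signed evidence item of `kind`."""
    def check(evidence: list[Evidence]) -> Optional[str]:
        if any(e.kind == kind and e.signed for e in evidence):
            return None
        return f"missing signed evidence of kind '{kind}'"
    return check


def evaluate(artifact: str, store: list[Evidence], policies: list[Policy]) -> list[str]:
    """Return every policy violation for `artifact`; an empty list means trusted."""
    relevant = [e for e in store if e.artifact == artifact]
    return [msg for policy in policies if (msg := policy(relevant)) is not None]


# Illustrative evidence store: the SBOM is signed, the bias report is not.
store = [
    Evidence("ml-service:latest", "sbom", signed=True),
    Evidence("ml-service:latest", "bias-test-report", signed=False),
]
policies = [require_signed("sbom"), require_signed("bias-test-report")]
violations = evaluate("ml-service:latest", store, policies)
print(violations)
```

Because the engine only sees generic evidence items, the same loop can evaluate software evidence (an SBOM) and ML evidence (a bias test report) side by side, which is the point made in the text.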

Scribe’s evidence-based approach is agnostic to the specifics of the evidence; thus, the same technology can serve MLOps protection, for example:

- Ensuring software integrity and ML pipeline integrity.

- Ensuring the integrity of open-source dependencies and the integrity of the AI model.

- Evaluating SAST reports as well as reports from AI-specific testing tools (e.g., bias testing).

- Sharing SBOMs and policy evaluations, as well as MLBOMs and MLOps policy evaluations.

Scribe Security Software Supply Chain Technology for AI/ML-Ops

The MITRE ATLAS and Scribe’s Technology

Following is a mapping of Scribe’s current capabilities compared to the MITRE ATLAS attack map:

| Attack Stage | Techniques | Scribe’s Solution |
|---|---|---|
| Attacker Resource Development | Publish Poisoned Datasets; Poison Training Data | Data integrity: Attest to datasets consumed, and verify datasets source and content. Attest to training data, and verify training data content and source. |
| Initial Access | ML Supply Chain Compromise | Data and code integrity: Attest to data, models, software, and configurations of the ML pipelines. ML Pipeline Policy enforcement: Attest to actions and verify policies accordingly (e.g., release process kit, tests, access patterns) |
| Initial Access, Impact | Evade ML Model (e.g., crafted requests) | Accurate pipeline tracking: Track resources and detect anomalies of ML pipeline access patterns (FS-Tracker) |
| Execution | Command and Scripting Interpreter | Accurate pipeline tracking: Track resources and detect anomalies of ML pipeline access patterns (FS-Tracker) |
| Persistence | Poison Training Data | Data integrity: Attest to training data, verify training data content and source. |
| Persistence, ML Attack Staging | Backdoor ML Model | Data integrity: Attest to ML model lifecycle, verify on use. |
| Impact | System Misuse for External Effect | System Level Policies: Attest to system behavior and characteristics and apply policies accordingly (e.g., compute costs, access patterns). |

Following is a mapping of the MITRE Mitigations compared to Scribe’s technology:

| MITRE Mitigation ID | Mitigation | Scribe’s Solution |
|---|---|---|
| AML.M0005 | Control Access to ML Models and Data at Rest | Accurate pipeline tracking: Track resources and detect anomalies of ML pipeline access patterns (FS-Tracker) |
| AML.M0007 | Sanitize Training Data | Data integrity: Attest to and verify data used for training |
| AML.M0011 | Restrict Library Loading | Data and code integrity: Attest to and verify data model and code library loading. |
| AML.M0013 | Code Signing | Code integrity: Attest to and verify code used. |
| AML.M0014 | Verify ML Artifacts | Data and code integrity: Attest to and verify data model and code library loading. |
| AML.M0016 | Vulnerability Scanning | Vulnerability scanning, policy evaluation: Attest to the execution of tools such as vulnerability scanning. Evaluate policies regarding these attestations. Scan vulnerabilities based on SBOM attestations collected from the ML pipeline. |

Signing and Verifying ML Datasets and Models Using Valint

Valint is Scribe’s powerful CLI tool for generating and validating attestations. Valint can be used to sign and verify ML datasets and models.

Example:

We want to use the HuggingFace model wtp-bert-tiny. In order to prevent compromising the model, we want to sign it and verify it before use. Creating an attestation (signed evidence) can be done with the following command:

valint bom git:https://huggingface.co/benjamin/wtp-bert-tiny -o attest

This command will create a signed attestation for the model’s repo. The attestation will be stored in an attestation store (in this case – a local folder) and will be signed (in this case – using Sigstore keyless signing).

A typical use of the model would be to clone the repo and use its files. Verifying the model’s integrity immediately after downloading can be done with the following commands:

git clone https://huggingface.co/benjamin/wtp-bert-tiny

valint verify git:wtp-bert-tiny

Verification of the model’s integrity before each use can be done with the following command:

valint verify git:wtp-bert-tiny

Notes:

- A similar approach can be used to sign and verify datasets.

- One of the characteristics of ML models is their huge size. To avoid downloading and handling large files that are not needed, a best practice is to download only necessary files. This use case is supported by Valint, which supports signing only a specific folder or file.
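The idea behind signing a model and verifying it before use can be sketched in plain Python. This is not how Valint works internally and it omits the cryptographic signature entirely; it only shows the underlying integrity check, assuming a manifest of SHA-256 digests recorded at "sign" time. The function names and the `subpath` parameter (mirroring the note about covering only a specific folder or file) are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large model files fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def digest_manifest(root: Path, subpath: str = ".") -> dict[str, str]:
    """Map each file under root/subpath (skipping .git) to its digest."""
    base = root / subpath
    files = [base] if base.is_file() else sorted(p for p in base.rglob("*") if p.is_file())
    return {
        p.relative_to(root).as_posix(): file_digest(p)
        for p in files
        if ".git" not in p.relative_to(root).parts
    }


def verify(root: Path, expected: dict[str, str], subpath: str = ".") -> list[str]:
    """Compare the current files against a recorded manifest; [] means intact."""
    actual = digest_manifest(root, subpath)
    problems = [f"modified: {n}" for n in expected if n in actual and actual[n] != expected[n]]
    problems += [f"missing: {n}" for n in expected if n not in actual]
    problems += [f"unexpected: {n}" for n in actual if n not in expected]
    return sorted(problems)


# Demo on a throwaway directory standing in for a cloned model repo.
with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp) / "wtp-bert-tiny"
    model_dir.mkdir()
    (model_dir / "config.json").write_text('{"hidden_size": 128}')
    (model_dir / "model.bin").write_bytes(b"\x00" * 1024)

    manifest = digest_manifest(model_dir)                  # recorded once
    clean = verify(model_dir, manifest)                    # checked before use
    (model_dir / "model.bin").write_bytes(b"\x01" * 1024)  # simulate tampering
    tampered = verify(model_dir, manifest)

print(clean, tampered)
```

In a real setup the manifest itself must be signed and stored where an attacker cannot rewrite it, which is the part an attestation tool such as Valint takes care of.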

Verifying Policies on ML Models

Scribe’s Valint is a powerful policy verification tool. One of the ways to manage risk is to enforce policies. In the following section, we shall demonstrate how to reduce risk by enforcing a licensing policy on ML models used.

Suppose we only allow the use of the MIT license in our project. Once configured, Valint can verify it:

valint verify git:wtp-bert-tiny -d att -c verify-license.yml

This command uses the verify-license policy that is defined as follows:

    attest:
      cocosign:
        policies:
          - name: ML-policy
            enable: true
            modules:
              - name: verify-license
                type: verify-artifact
                enable: true
                input:
                  signed: true
                  format: attest-cyclonedx-json
                  rego:
                    path: verify-hf-license.rego

The policy implemented in the verify-hf-license.rego file extracts the HuggingFace model ID from the signed attestation, pulls information about the model from the HuggingFace API, and verifies that the model’s license is MIT.
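The logic of such a license check can be approximated in Python. This is a sketch of the idea, not the contents of verify-hf-license.rego, which is not shown here. It assumes that the HuggingFace model endpoint returns JSON carrying the license as a `license:<id>` tag and/or under `cardData.license`; treat those field names, the function names, and the canned response in the demo as assumptions for illustration.

```python
import json
from typing import Callable
from urllib.request import urlopen

ALLOWED_LICENSES = {"mit"}


def fetch_model_info(model_id: str) -> dict:
    """Fetch model metadata from the HuggingFace API (performs a network call)."""
    url = f"https://huggingface.co/api/models/{model_id}"
    with urlopen(url, timeout=10) as resp:
        return json.load(resp)


def model_licenses(info: dict) -> set[str]:
    """Collect license identifiers from the assumed 'tags' and 'cardData' fields."""
    found = {
        tag.split(":", 1)[1].lower()
        for tag in info.get("tags", [])
        if tag.startswith("license:")
    }
    card_license = (info.get("cardData") or {}).get("license")
    if isinstance(card_license, str):
        found.add(card_license.lower())
    return found


def license_allowed(model_id: str, fetch: Callable[[str], dict] = fetch_model_info) -> bool:
    """True only if the model declares a license and all declared ones are allowed."""
    licenses = model_licenses(fetch(model_id))
    return bool(licenses) and licenses <= ALLOWED_LICENSES


# Demo with a canned response instead of a live API call (made-up data).
def canned_response(model_id: str) -> dict:
    return {"tags": ["license:mit"], "cardData": {"license": "mit"}}


print(license_allowed("example-org/example-model", fetch=canned_response))
```

Treating a model with no declared license as a failure is a deliberate choice in this sketch: a policy that passes on missing metadata would be easy to bypass.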

A similar flow can be used to verify licenses of open-source datasets.

Use Case: Protecting a Real-World ML-Ops Service

An ML-Ops service is part of an application that enables easy access to AI models; the service users only need to state their requests, and all the practicalities of accessing the ML model are handled behind the scenes by the service.

Example:

We want to produce and use a service that exposes access to Microsoft’s “guidance” open source package (in simple words, this package enables better utilization of Large Language Models (LLMs) by running chains of queries instead of a single prompt).

The service will be a Docker image that contains the service code and the model. We will base our code on the Andromeda-chain project, which wraps the guidance library with a service and builds a Docker image with the application.

Following is the basic version of the Dockerfile:

    FROM python:3.10

    # Install the service's Python dependencies
    COPY ./requirements.cpu.txt requirements.txt
    RUN pip3 install -r requirements.txt

    # Download the model into the image
    RUN mkdir models && \
        cd models && \
        git clone https://huggingface.co/benjamin/wtp-bert-tiny

    # Copy the service code
    COPY ./guidance_server guidance_server
    WORKDIR guidance_server

    # Set the entrypoint
    CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "9000"]

It is quite straightforward: when the Docker image is built, the code dependencies are installed, the model is downloaded, and the service code is copied into the image.

Once the image is built, we can create a signed attestation of it with the following Valint command:

valint bom ml-service:latest -o attest

This command generates a signed attestation that contains a detailed SBOM of the Docker image named ml-service.

This attestation can be used later for verifying the Docker image, using the following command:

valint verify ml-service:latest

This command verifies the integrity of the image – both the code and the ML model. The verification can be done each time the image is deployed, thus ensuring the use of a valid container.

Building a Protected ML-Ops Service

Combining the capabilities demonstrated in the previous paragraphs, we can now demonstrate how to protect the building of an ML-Ops service:

Prerequisite: Once a model is selected – create an attestation of it:

valint bom git:https://huggingface.co/benjamin/wtp-bert-tiny -o attest

Build Pipeline:

1. Verify the integrity and the license of the model in the build pipeline:

git clone https://huggingface.co/benjamin/wtp-bert-tiny

valint verify git:wtp-bert-tiny -d att -c verify-license.yml

2. Build the Docker image and create an attestation of it:

docker build -t ml-service:latest .

valint bom ml-service:latest -o attest

3. Before using the image, verify it:

valint verify ml-service:latest

This verification step guarantees that the image deployed is the one created with the verified model inside.
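The build pipeline above can be wired into a fail-fast script. The sketch below simply runs the commands from the steps in order and stops at the first failure. It assumes that `valint verify` exits with a non-zero status when verification fails, which should be confirmed against the Valint documentation; the function names are hypothetical.

```python
import subprocess
from typing import Callable

MODEL_URL = "https://huggingface.co/benjamin/wtp-bert-tiny"
IMAGE = "ml-service:latest"


def build_steps(model_url: str = MODEL_URL, image: str = IMAGE) -> list[list[str]]:
    """Return the pipeline commands from the steps above, in execution order."""
    model_dir = model_url.rstrip("/").rsplit("/", 1)[-1]  # directory git clone creates
    return [
        ["git", "clone", model_url],
        ["valint", "verify", f"git:{model_dir}", "-d", "att", "-c", "verify-license.yml"],
        ["docker", "build", "-t", image, "."],
        ["valint", "bom", image, "-o", "attest"],
        ["valint", "verify", image],
    ]


def run_pipeline(steps: list[list[str]], run: Callable = subprocess.run) -> None:
    """Run each step; check=True raises CalledProcessError on a non-zero exit,
    so an unverified model never reaches the docker build step."""
    for cmd in steps:
        run(cmd, check=True)


steps = build_steps()
for cmd in steps:
    print(" ".join(cmd))
# To execute for real (requires git, docker, and valint on PATH):
# run_pipeline(steps)
```

Passing the runner as a parameter keeps the ordering logic testable without invoking any external tool.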

A similar verification can be performed before each deployment in Kubernetes using Scribe’s admission controller.

Recommendation

Investing in a software supply chain security product in 2024 that addresses both the immediate requirements of the software supply chain and the evolving demands of MLOps is a strategic choice.

Investing in an evidence-based solution with a flexible policy engine would allow future integration with emerging domain-specific MLOps security technologies as they mature.

Why is This a Scribe Security Blog Post?

You should know the answer if you have read this far: Scribe provides an evidence/attestation-based software supply chain security solution with a flexible and extendable policy engine. For an elaborate use case of protecting an MLOps pipeline using Scribe products, press here.

This content is brought to you by Scribe Security, a leading end-to-end software supply chain security solution provider, delivering state-of-the-art security to code artifacts and code development and delivery processes throughout the software supply chains.